Finetuning is so back

Some of you may be wondering, “Wait, when did finetuning go away”? The answer is that, for a year or two now, people have been brainwashed to not touch finetuning. Frontier labs have been saying “we recommend that you rely on our models getting better, cheaper, and faster”. This is pretty much sending the same message as “don’t even try to finetune”. See this video from Anthropic’s devrel as an example. The underlying facts (better, cheaper, faster) have been mostly true, but it’s always beneficial to get the full picture. Moreover, people are taking this narrative to the extreme:

Managers going around cancelling finetuning projects within companies since “frontier models will improve”.

People are claiming that even the engineering time to build software is wasted since “frontier models will improve” and they will just write all the engineering glue needed with a prompt.

Many founders hesitant to start new ventures since “frontier models will improve” and one prompt will do the job of one company.

When I speak to folks in the industry, these are some reasons I usually get, beyond the ones above.

We don’t know how to finetune.

Finetuning is expensive.

We tried and it didn’t work.

It’s all changing now. Feels like we are at an inflection point here, with models actually not improving the rate at which they were improving and finetuning becoming more and more accessible, and the awareness also increasing. And oh RL is also playing a role. People are now running into these problems and asking if finetuning makes sense:

I have tried RAG and prompting ad nauseam and I can’t make things work well.

I don’t want to send all my data to the model providers.

It’s too expensive! Can I distill the capabilities I need into a smaller model?

I am fed up of rate limits and chasing the providers.

The model I was using got deprecated and all my prompts are useless now.

I am getting all this feedback, but there is no systematic way of using it. How can I use it?

AI is very important to me and so I don’t want to outsource my AI strategy!

So let’s start.

1. Closed models are improving, but slowly

Let’s take an objective look at the pace of model improvement. The word “improving” itself is subjective, so let’s see if we can break it down further into quality, cost, latency.

Quality

Yes, the quality of the models is improving overall.

For many models, this is measured on benchmarks, and of course, as you may expect where I am going, real-world is a different story. OpenAI was painting the picture of AGI recently. But that was just before they raised a large round. Last I checked, we don’t have AGI yet! And in fact, GPT-4.5 felt a bit like disappointment (though it does seem like a solid model). Anthropic seems to be losing the race, with some people complaining about sonnet-3.7 regressing (but I think it’s solid). Gemini is doing well!

Overall, we need to cut through the hype, and the models are improving slowly but steadily.

Cost

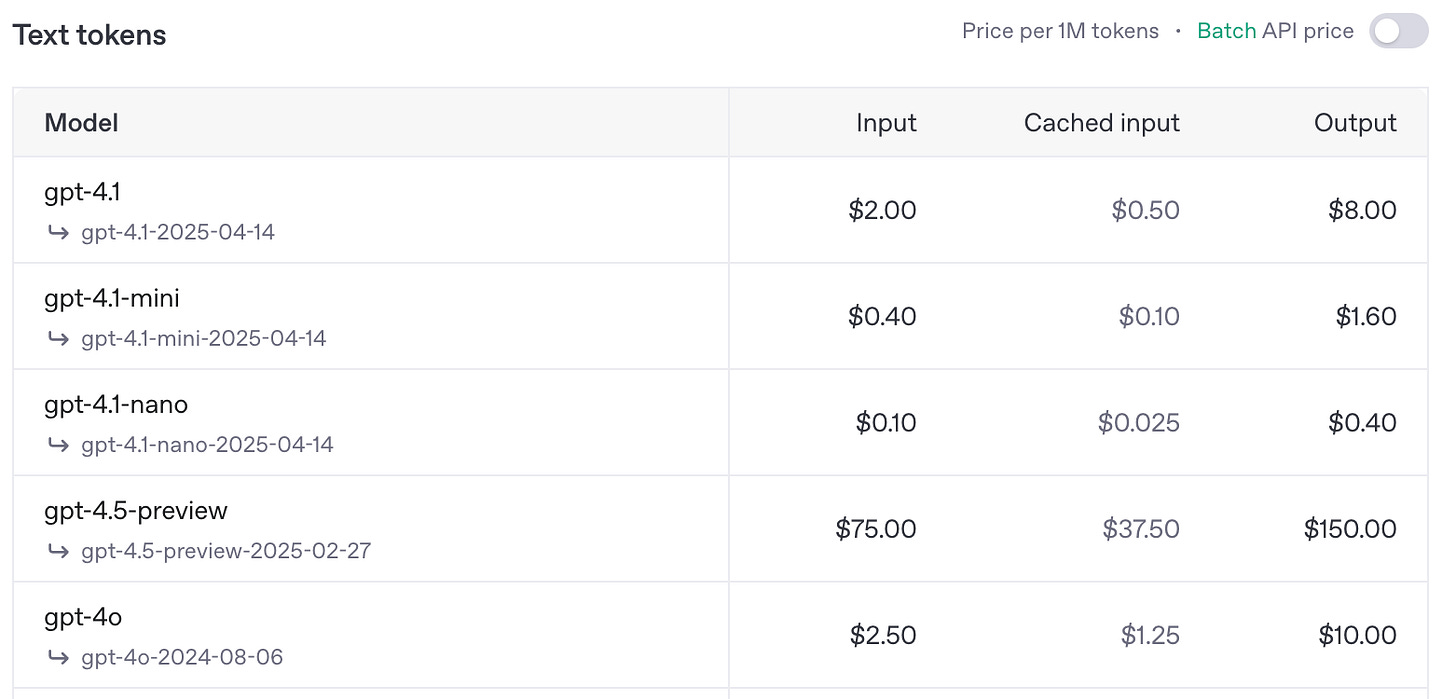

The popular claim that everyone believes is that models are becoming cheaper, and to the extent that people think they will be free. How is OpenAI supposed to make any money?

The models are becoming cheaper mainly because of venture money and the need to capture market share. Google has large coffers. OpenAI is bleeding money in the billions.

Remember that GPT-4.5 was not cheap at all.

And as people adopt more and more of AI, their costs are going up.

Latency

The latencies depend not only on the type of model, but also the load. With the reasoning models, the latencies are a lot longer.

A mental model

You can think of the model’s capabilities like a sphere that’s gradually becoming larger and larger, as it learns to do more things. At the end of the day, models are approximating the data they train on. For most of the fancy capabilities you see, the models were trained on similar data. So these spheres are becoming larger and larger because they are seeing more and more data.

But the world has infinite amount of data. The models just can’t learn everything. There will be a point after which it doesn’t make sense to make it learn every single thing, due to cost and latency issues.

At that point (or even before), we are going to want to start specializing, rather than try to generalize.

2. Open-source models are pretty good

The open-source models are constantly improving as well. We have a great set of open-source models, like Llama, Qwen, DeepSeek, and OpenThinker, which is our reasoning model.

And in fact, the closed labs have open-sourced some good models as well, like Gemma from Google, and we should see something from OpenAI as well soon.

In general, there is and there will be a gap between closed models and open-models (with the open ones lagging behind). But the real power of open models is that they can be customized and finetuned.

Closed labs hate this one technique

Distillation is the process by which you take the knowledge from a larger teacher model and use that to improve a smaller student model.

And if you are smart about things, you can actually make the student model better than the teacher! For example, you can bring in some of your intuition, or use additional data you have etc.

When we trained Bespoke-MiniCheck-7B (a model that specializes in detecting a special kind of hallucinations), we used data from Llama3.1-405B, and our finetuned/distilled model was not only better than Llama3.1-405B, but also better than the frontier models.

3. Where is finetuning useful?

Prompt hell

People are stuck in prompt hell. They have a series of prompts and they can’t get the system to do what they want it to do. So they tweak something here and something there, and hope that it helps. They add to the prompts things like “Please follow all the instructions otherwise my cat will fall sick”.

Sensitive data

Sometimes, you just don’t want to send your data outside your perimeter. This is either due to what you believe in, or due to regulations.

Cost and Latency

With the magic of distillation and careful finetuning, you can create much smaller models for the tasks you care about. Smaller models have lower costs and lower latencies. And also more portable.

Frontier labs use this widely, so why shouldn’t the rest of the world use it?

Owning AI

AI is so critical but it’s being outsourced away to the large model providers. There are similarities between what’s happening here and what has happened to manufacturing in the US.

Train or be trained on

Bulk of your business logic is captured by the data you own and the evals you specify. If you give both away, it’s possible to train a model that is going to mimic your business logic.

Over time, we are going to see that the frontier labs are going up the stack and owning more and more of different business logics.

4. RL is showing the way

Reinforcement learning (RL) finetuning feels like magic. In the research community there is a lot of interest around this, because it improves reasoning of models, which is essential for building good agents. And it needs very little data.

For example, we recently showed that with RL finetuning on 100 examples, we are able to improve the tool calling abilities of a small language model. Supervised finetuning would have required a lot more examples, but this is besides the point. The point is that model customization works better than prompting.

The important part here is that RL is able to make use of the feedback and actually incorporate it (most of the time, people typically ignore feedback when prompting).

Here’s what we had written in the blog post, which is illustrative of things that are illustrative of the future:

Manual prompt engineering for building agents is easy to start with, but doesn’t scale well. To begin with, the list of tools may be long and diverse. Moreover, a prompt engineer designing the agent has to spend increasing amounts of time to cover more and more edge cases with handcrafted rules through trial-and-error. Such a manual approach is reminiscent of what Rich Sutton warned against in his essay, The Bitter Lesson:

"1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning"

5. Finetuning is so back!

I see more and more people talk about finetuning, and asking us how to use RL for improving agents. People are realizing prompting is not good for their health.

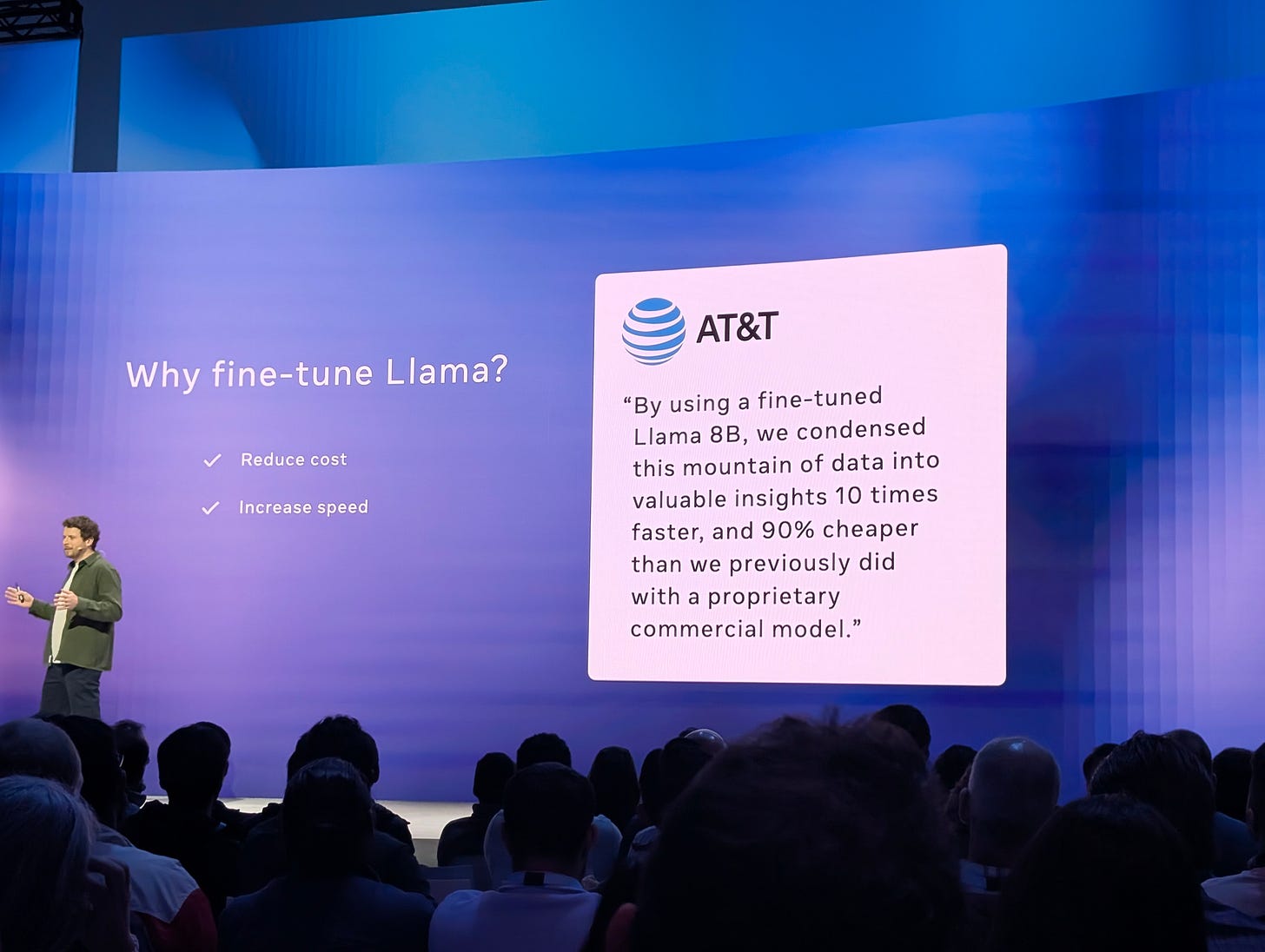

At llamacon, finetuning was a big theme: they gave several examples of success stories and also launched a finetuning service.

We have heard from finetuning providers that they are seeing an upward trend in the finetuning adoption and there are various anecdotes in the wild about finetuning becoming important.

Prompting is so over. Finetuning is so back.